October 19, 2020

What is Throughput in JMeter?

Throughput is a measure of how many units of work are processed in a given amount of time. In load testing, this is usually hits per second, also known as requests per second. Understanding throughput helps teams develop test cases based on expected user engagement, such as peak traffic events.

Throughput vs. Concurrent Users

Concurrent users are the number of users engaged with the app or site at a given time. They’re all in the middle of some kind of session, but they are all doing different things.

Perhaps the easiest way to think about it is as a room full of people with laptops and devices. You are the moderator for a group exercise. You ask each person to start browsing the site. You may even assign subgroups, some to buy an item, others to simply surf around. You then say “Ready, Set, Go!” and they’re off and running. Walking around, you can see that each of them is at a different page. Some are reading content, others are clicking around, and still others have stopped to take a call on their phone. One guy actually fell asleep!

These are concurrent users.

But if you want a measure of activity, you need to look at how many requests are being sent to the servers over time. That’s throughput.

How Throughput in JMeter Works

Understanding throughput is fairly straightforward. Tests send a certain number of HTTP requests to the servers, which process them. The total is then expressed as a rate per second. To keep the math simple, if a server receives 60 requests over the course of one minute, the throughput is one request per second. If it receives 120 requests in one minute, its throughput is two requests per second. And so on.

One way you can understand throughput in JMeter is by likening it to a conveyor belt in a factory. One widget at a time is passed through a machine that stamps it with a label. If the machine stamps sixty widgets in one minute, its throughput is one widget per second.
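The arithmetic above can be sketched in a few lines of Python. This is only an illustration, not how JMeter itself computes the figure: the function names `average_throughput` and `hits_per_second` are made up for this example, and the timestamps are assumed to be request start times in milliseconds.

```python
from collections import Counter


def average_throughput(request_count: int, duration_seconds: float) -> float:
    """Average requests per second over a test window."""
    if duration_seconds <= 0:
        raise ValueError("duration_seconds must be positive")
    return request_count / duration_seconds


def hits_per_second(timestamps_ms: list[int]) -> dict[int, int]:
    """Count requests in each one-second bucket, keyed by whole second.

    Assumes each timestamp is a request start time in milliseconds.
    """
    return dict(Counter(ts // 1000 for ts in timestamps_ms))


if __name__ == "__main__":
    # 60 requests in one minute, then 120 requests in one minute.
    print(average_throughput(60, 60))
    print(average_throughput(120, 60))
    # Four requests spread over three consecutive seconds.
    print(hits_per_second([0, 500, 1000, 2999]))
```

The per-second buckets show the bursts that a single average hides, which is why both views are useful when reading test results.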

Concurrent users are a different way of looking at application traffic. In planning our load tests, our goal is often to measure whether or not our application (not just the web server, but the entire stack) can handle an expected amount of traffic. Among the easier variables for us to work with is how many users we expect to have visiting the site and interacting with various pages, or using an app from mobile devices.

We might get these numbers from tracking tools like Google Analytics or MixPanel or KISSmetrics. We might also get them straight from machine logs. Whatever the case, we generally find it more useful to think of the number of people engaging with the site, not machine requests, when we’re planning capacity and designing our load test.

It’s an easier conversation for all stakeholders involved if we talk about the coming rush of Christmas shoppers or the expected number of new students registering than if we talk about how many GET or POST requests might be coming in every second.

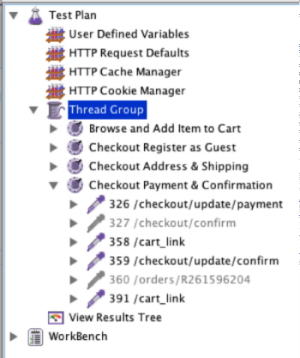

That usually leads us to develop our test cases based on the idea of a user or visitor. We create a script in a tool like Apache JMeter that mirrors what we expect users to do, such as navigating through a retail site, searching for an item, choosing one and buying it, registering for an account, editing profile preferences, and so on.

Scripting like this is a good practice since it exercises the same components throughout the stack that will be hit with actual production traffic. The scripts then act as “virtual copies” of a real user, doing the same things with the system under test that those real users do.

We may expect to have 500 users on the site at any given point, and we want to test the site’s behavior when 500 users are doing things at more or less the same time. But humans visiting our site don’t act in a synchronized way, of course. At any given point, some people will be searching, others will be reading an item’s description, still others will be updating their profile pic, and maybe some will stop for a minute to check their email and will return to the site after that pause.

We would say that these are 500 concurrent users, but not making 500 concurrent requests. The hits per second that those concurrent users generate will only be based on their actual interactions with the app, when they click a button or a link or submit a form.
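As a rough back-of-the-envelope sketch of that gap, one common approximation (based on Little's Law, which this article does not itself use) is that each user sends one request per cycle of response time plus think time. The function name and the timing values below are assumptions chosen purely for illustration, not measurements.

```python
def estimated_hits_per_second(concurrent_users: int,
                              avg_response_time_s: float,
                              avg_think_time_s: float) -> float:
    """Rough estimate: each user issues one request per
    (response time + think time) cycle."""
    cycle_seconds = avg_response_time_s + avg_think_time_s
    if cycle_seconds <= 0:
        raise ValueError("response time plus think time must be positive")
    return concurrent_users / cycle_seconds


if __name__ == "__main__":
    # Hypothetical values: 500 users, 0.5 s average response time,
    # 9.5 s average pause between clicks.
    print(estimated_hits_per_second(500, 0.5, 9.5))  # 50.0
```

Under those assumed timings, 500 concurrent users would produce about 50 hits per second rather than 500. Real users are far less regular, so treat the result as a starting point to check against measured data.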

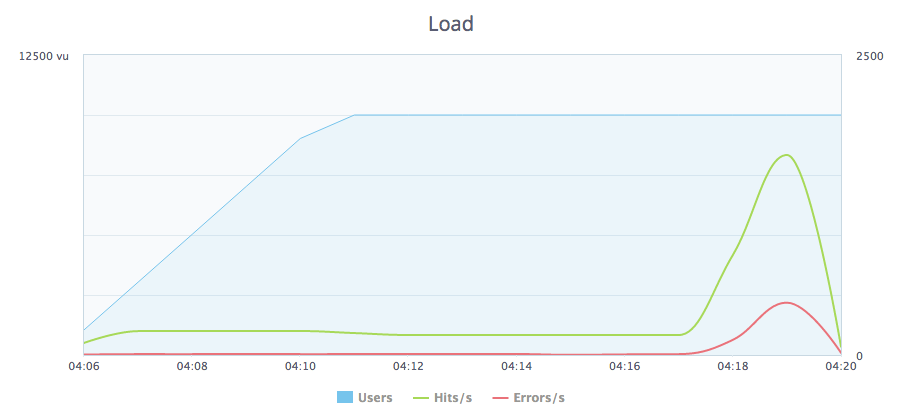

The Impact of Throughput on Measuring Performance

What does this all mean for our load tests? What should we be working with to measure performance most accurately?

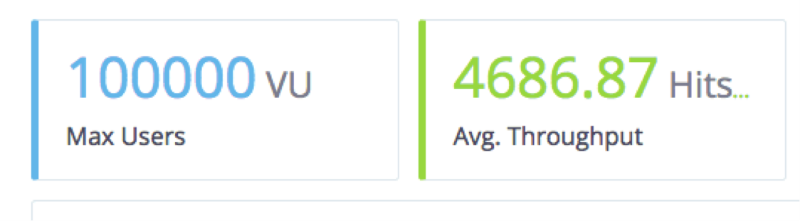

A good suggestion is to start with a set of scripts that represent the natural paths through the application that you already know users take. Then run those scripts at stepped levels of load. For example, run a script with 10 users, then 100, then 1,000 or more. Examine the Hits per Second for each run to get a sense of the level of activity each step generates, and you can probably extrapolate from there. Keep in mind that the scaling won’t be linear, but you’ll have some solid numbers to start from.
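One way to compare stepped runs is to put the hits per second for each step next to the hits per second per user. The sketch below assumes you have already collected the total request count and duration for each run. The `LoadStep` type, the `summarize` function, and the sample numbers are hypothetical placeholders, not real test results.

```python
from dataclasses import dataclass


@dataclass
class LoadStep:
    users: int               # virtual users in this run
    total_requests: int      # requests completed during the run
    duration_seconds: float  # length of the run


def summarize(steps: list[LoadStep]) -> list[tuple[int, float, float]]:
    """Return (users, hits/sec, hits/sec per user) for each step,
    ordered by user count."""
    rows = []
    for step in sorted(steps, key=lambda s: s.users):
        hits_per_sec = step.total_requests / step.duration_seconds
        rows.append((step.users, hits_per_sec, hits_per_sec / step.users))
    return rows


if __name__ == "__main__":
    # Placeholder numbers for illustration only.
    runs = [
        LoadStep(users=10, total_requests=600, duration_seconds=60),
        LoadStep(users=100, total_requests=5400, duration_seconds=60),
        LoadStep(users=1000, total_requests=42000, duration_seconds=60),
    ]
    for users, hps, per_user in summarize(runs):
        print(f"{users:>5} users  {hps:8.1f} hits/s  {per_user:.2f} hits/s per user")
```

If the per-user figure falls as the user count rises, as it does in these placeholder values, the scaling is not linear and extrapolating from the smallest run would overestimate throughput at higher load.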

We’re often asked to help customers translate one value to the other, to calculate that X number of users = Y number of hits/sec. We can definitely help with that, but only if we have some good data sets as baselines, since every app is different and every script has multiple variables that change the equation.

If you’d like to work with us on that, let’s get together and run a few tests against your apps and see what we can come up with.

Questions? You can either contact us directly, or leave your comments below. Let us know what you think.

Related Resources

- How to Use JMeter's Constant Throughput Timer

- JMeter Ramp-Up Period: The Ultimate Guide

- How to Use the SetUp Thread Group in JMeter