January 25, 2023

API Performance Testing Scenarios and Vocabulary

APIs should be functionally correct, as well as available, fast, secure and reliable. For developers, API testing is essential not just for making promises to customers (and keeping them) but also for gathering data about API performance to improve development. API testing, however, especially when it comes to performance, is a vast field, and you might get lost in the jargon.

To help you navigate through your API performance tests, this article is an introduction to API testing terminology and scenarios. Let’s get started.

What is API Performance Testing?

API performance testing evaluates the overall performance of an application programming interface (API) under specific conditions. API performance tests can broadly be divided into two types: functional tests and load tests.

API Performance Testing Types

API Functional Tests

Functional API tests are the equivalent of unit tests for software: a way to ensure that the API returns the desired output for a given input. These tests can run in every environment, from a developer's personal computer through staging environments to the final production system. It's recommended to run them after each deployment to verify that the deployment didn't break functionality. When the deployment works, the tests should return the same results everywhere.

API Load Tests

Load API tests, on the other hand, typically run in production or an equivalent system. This is because non-functional constraints such as reliability and responsiveness look different under various real-world conditions.

In load tests, APIs and consumers form client-server systems where multiple clients hit the same server at the same time, and the number of clients can drastically affect the behavior of the API. Therefore, every load test works with virtual users, typically abbreviated to VUs, which simulate different clients.

A single machine can simulate multiple VUs through multithreading, and in cloud-based test environments it is possible to run multiple machines concurrently to simulate an even higher number of VUs, and therefore test more significant load.
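As a minimal sketch of this idea, the following Python snippet runs several VUs as threads on one machine. The function `fake_api_call` is a hypothetical stand-in that only sleeps for about 10 ms; in a real test it would be replaced by an actual HTTP request to your API.

```python
import threading
import time


def fake_api_call() -> float:
    """Hypothetical stand-in for an HTTP request; returns elapsed seconds."""
    start = time.perf_counter()
    time.sleep(0.01)  # pretend the server needs about 10 ms to answer
    return time.perf_counter() - start


def run_virtual_users(vu_count: int, requests_per_vu: int) -> list[float]:
    """Run vu_count threads, each sending requests_per_vu calls in a row."""
    timings: list[float] = []
    lock = threading.Lock()

    def virtual_user() -> None:
        for _ in range(requests_per_vu):
            elapsed = fake_api_call()
            with lock:  # protect the shared list of results
                timings.append(elapsed)

    threads = [threading.Thread(target=virtual_user) for _ in range(vu_count)]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return timings


timings = run_virtual_users(vu_count=5, requests_per_vu=3)
print(f"{len(timings)} requests recorded")
```

Dedicated load testing tools handle this scheduling for you; the sketch only illustrates why one machine can stand in for many clients.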

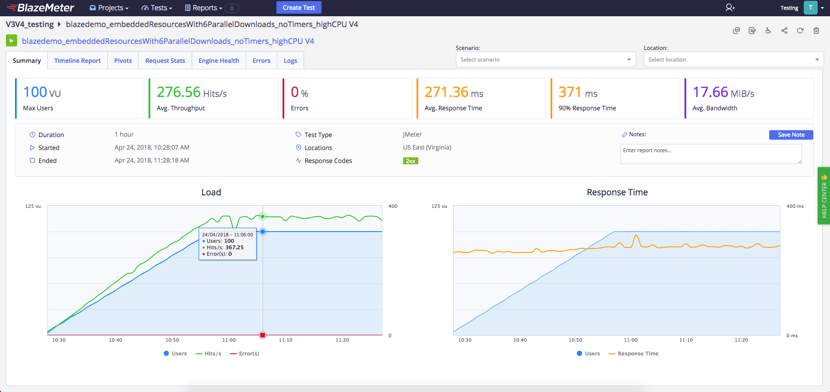

As an example, BlazeMeter supports up to 50 VUs on the free plan and tens of millions on paid plans. You can have thousands of machines generating the load for your API server.

API performance testing works similarly to website testing, though website tests may include client-side browser behavior whereas API tests only send network requests.

Load testing scripts can reuse functional tests, executed repeatedly and simultaneously. However, load tests provide a much broader range of KPIs than functional tests. Functional testers typically don't care about response times as long as there is no timeout, but load testers record them for each run to provide statistics such as average, median, minimum, and maximum response times that the API provider may want to assert as part of their Service-Level Agreement (SLA).
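To illustrate the kind of summary a load test produces, here is a small Python sketch that computes these statistics from recorded response times. The sample values and the SLA threshold `sla_max_average_ms` are made up for the example; the median is included because a single slow request distorts it less than it distorts the average.

```python
import statistics


def summarize(response_times_ms: list[float]) -> dict[str, float]:
    """Summary statistics for a list of response times in milliseconds."""
    return {
        "average": statistics.mean(response_times_ms),
        "median": statistics.median(response_times_ms),
        "minimum": min(response_times_ms),
        "maximum": max(response_times_ms),
    }


# Made-up measurements; note the single slow outlier of 480 ms.
samples = [120.0, 95.0, 110.0, 480.0, 105.0]
summary = summarize(samples)

sla_max_average_ms = 200.0  # hypothetical SLA threshold
sla_met = summary["average"] <= sla_max_average_ms

print(summary)
print("SLA met:", sla_met)
```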

Planning Load Test Scenarios

Load tests need proper planning. To administer a test, you need both a test script and one or more scenarios in which to execute it. You should ask yourself what you want to accomplish with your testing, and then select the approach and its parameters accordingly. It's also important to consider the use cases of your API and which load and changes in API request traffic you are likely to experience as a result.

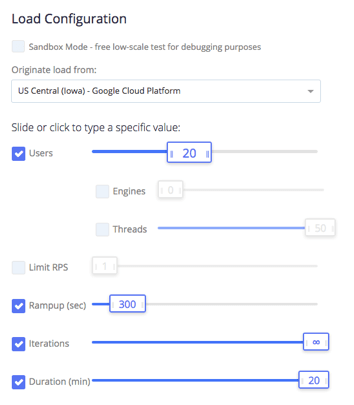

In BlazeMeter, you can create your test scenario in an open source testing tool like Apache JMeter™ or Gatling, and then configure the test attributes in the Load Configuration screen.

Load Tests

The term Load Testing refers to the standard version of the performance test. You specify the number of VUs, the test script, and the time to run the test. All VUs then hit your API continuously during the specified time while the testing tool records the performance. Afterwards, you can compare the performance metrics against the SLA.

Stress Tests

When performing stress testing, you start with a low to medium number of VUs and then increase their count step by step. This process is also called ramping up. The idea behind this testing approach is to continuously grow the load to find the point at which your API becomes too slow, stops responding, or throws errors.
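The search for that point can be sketched as a simple loop. In the Python example below, `toy_measure` is an invented model of how latency and error rate react to load; in practice those numbers would come from running your test at each step. The thresholds are assumptions chosen for illustration.

```python
from typing import Callable, Optional


def find_breaking_point(
    measure: Callable[[int], tuple[float, float]],
    start_users: int,
    step: int,
    max_users: int,
    max_latency_ms: float,
    max_error_rate: float,
) -> Optional[int]:
    """Raise the VU count step by step; return the first count that fails."""
    users = start_users
    while users <= max_users:
        latency_ms, error_rate = measure(users)
        if latency_ms > max_latency_ms or error_rate > max_error_rate:
            return users
        users += step
    return None  # no breaking point found within max_users


def toy_measure(users: int) -> tuple[float, float]:
    """Invented model: latency grows with load, errors appear above 400 VUs."""
    latency_ms = 50.0 + 0.5 * users
    error_rate = 0.0 if users <= 400 else 0.1
    return latency_ms, error_rate


breaking_point = find_breaking_point(
    toy_measure,
    start_users=50,
    step=50,
    max_users=1000,
    max_latency_ms=200.0,
    max_error_rate=0.01,
)
print("Breaking point:", breaking_point, "VUs")
```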

In BlazeMeter's Load Configuration you can select the number of users that you need and choose a long ramp-up time so you can observe the system as its load increases. The ramp-up starts with a single user and grows linearly until reaching the selected number of users.
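A linear ramp-up like the one described can be approximated with a few lines of Python. This is an illustration of the concept only; the function name, the rounding, and the parameters are assumptions and do not reflect how BlazeMeter computes its ramp-up internally.

```python
def active_users(elapsed_s: float, ramp_up_s: float, target_users: int) -> int:
    """Approximate number of VUs running elapsed_s seconds into a linear ramp-up."""
    if ramp_up_s <= 0 or elapsed_s >= ramp_up_s:
        return target_users
    fraction = max(elapsed_s, 0.0) / ramp_up_s
    # Always keep at least one user, since the ramp-up starts with a single VU.
    return max(1, round(fraction * target_users))


# A 10-minute ramp-up to 100 users, sampled at a few points in time.
for seconds in (0, 60, 300, 600):
    print(seconds, "s:", active_users(seconds, ramp_up_s=600, target_users=100))
```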

Endurance Tests

The idea behind Endurance Testing is that a system sometimes seems to perform as expected under a particular load and then suddenly stops working without any change in traffic. A common cause is running out of server memory or disk space, for example because of memory leaks or logs that are never cleaned up. To discover these problems before they occur in production, you can run an endurance test.

An endurance test is a load test that runs with a high but non-critical number of VUs for a long time, from several hours to multiple days.
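One way to spot a slow leak during such a run is to look at the trend of a resource over time rather than at single readings. The Python sketch below fits a least-squares line through memory samples and estimates when a limit would be reached. The sample readings and the 1000 MB limit are invented for illustration.

```python
def slope_per_hour(samples: list[tuple[float, float]]) -> float:
    """Least-squares slope of (hours_elapsed, memory_mb) samples."""
    n = len(samples)
    mean_x = sum(x for x, _ in samples) / n
    mean_y = sum(y for _, y in samples) / n
    numerator = sum((x - mean_x) * (y - mean_y) for x, y in samples)
    denominator = sum((x - mean_x) ** 2 for x, _ in samples)
    return numerator / denominator


# Invented readings: memory use in MB after 0, 1, 2 and 3 hours of load.
memory_samples = [(0.0, 500.0), (1.0, 520.0), (2.0, 540.0), (3.0, 560.0)]
growth_mb_per_hour = slope_per_hour(memory_samples)

memory_limit_mb = 1000.0  # hypothetical limit of the server
last_reading_mb = memory_samples[-1][1]
hours_left = (memory_limit_mb - last_reading_mb) / growth_mb_per_hour

print(f"Growth: {growth_mb_per_hour:.1f} MB/hour, limit reached in about {hours_left:.0f} hours")
```

A steady positive slope under constant load is a hint, not proof, of a leak; caches that fill up and then level off look similar at first.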

Peak and Spike Tests

With Peak Testing you simulate your API at peak times, the periods during which you expect a higher load. A peak test is typically shorter than an endurance test and often includes ramping up to, and down from, a load that is higher than normal but lower than the load applied in a stress test. These test cases can be helpful to test the scalability of your API, especially if you run it on serverless or auto-scaling infrastructure.

The idea behind Spike Testing is to increase the load in short bursts, much faster than in a peak test, to see how the system responds to sudden increases that level off again. You can configure spike tests in BlazeMeter by choosing a high number of users and short ramp-up times.

Endpoint vs. Scenario Testing

When doing software performance testing, you can call a single URL or multiple URLs in succession. The most straightforward approach is just testing single API endpoints. For a more realistic view, it can be helpful to write a few scenarios of multiple API calls that typically happen in succession.
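The difference between the two approaches can be shown with a small Python sketch. Here `fake_send` is a hypothetical stand-in for an HTTP client, and the paths `/login`, `/products`, and `/cart` are invented; a single-endpoint test is simply a scenario with one step.

```python
from dataclasses import dataclass


@dataclass
class Step:
    method: str
    path: str
    expected_status: int


def fake_send(method: str, path: str) -> int:
    """Hypothetical HTTP client; returns a status code from a fixed table."""
    routes = {
        ("POST", "/login"): 200,
        ("GET", "/products"): 200,
        ("POST", "/cart"): 201,
    }
    return routes.get((method, path), 404)


def run_scenario(steps: list[Step]) -> bool:
    """Run the steps in order and stop at the first unexpected status code."""
    for step in steps:
        if fake_send(step.method, step.path) != step.expected_status:
            return False
    return True


# Endpoint test: one call. Scenario test: calls that typically follow each other.
single_endpoint = [Step("GET", "/products", 200)]
checkout_flow = [
    Step("POST", "/login", 200),
    Step("GET", "/products", 200),
    Step("POST", "/cart", 201),
]

print("Endpoint test passed:", run_scenario(single_endpoint))
print("Scenario test passed:", run_scenario(checkout_flow))
```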

To set up a functional endpoint test in BlazeMeter you can click the URL/API Test button under Create Test and enter your API operation endpoints. For scenario tests, you can use open source JMeter or open source Taurus and then upload the script to BlazeMeter. There is even a feature in Taurus to take a Swagger/OpenAPI file and build a test based on it.

Other Non-Functional API Tests

This post has focused on API performance testing, but to give a broader overview of API testing, two more things deserve mentioning.

One is User Acceptance Testing (UAT), which is testing by the actual users. This is an important type of test because even the API with the best performance is pointless if it doesn't solve a problem the way the customer expects.

UAT is typically manual. When it comes to APIs, this means the tests are executed in clients like Postman or through integration in real-world apps. Some tools and approaches, such as behavior-driven development (BDD), can be used for automation.

The other crucial non-functional constraint of an API is security. Especially when APIs pose a risk to personal privacy, you should not overlook API security testing. You can include API security testing in your scripts for functional or performance tests by adding API requests with invalid inputs, with missing authentication details, or to non-existing endpoints, and asserting that the API provides proper error handling and doesn’t break.
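Such negative tests can be sketched in Python as a table of bad requests and the error each one should produce. The function `fake_api`, the `/orders` path, and the token value are all hypothetical stand-ins; against a real API you would send the requests over HTTP and compare the returned status codes.

```python
from typing import Optional

VALID_TOKEN = "secret-token"  # hypothetical credential for the example


def fake_api(path: str, token: Optional[str], payload: dict) -> int:
    """Hypothetical API that answers bad requests with a 4xx status code."""
    if path != "/orders":
        return 404  # non-existing endpoint
    if token != VALID_TOKEN:
        return 401  # missing or wrong authentication
    quantity = payload.get("quantity")
    if not isinstance(quantity, int) or quantity <= 0:
        return 400  # invalid input
    return 201


# Each case: path, token, payload, expected status code.
negative_cases = [
    ("/orders", VALID_TOKEN, {"quantity": -1}, 400),
    ("/orders", None, {"quantity": 1}, 401),
    ("/does-not-exist", VALID_TOKEN, {"quantity": 1}, 404),
]

for path, token, payload, expected in negative_cases:
    status = fake_api(path, token, payload)
    assert status == expected, f"{path}: expected {expected}, got {status}"
    assert status < 500, "a bad request must not cause a server error"

print("All negative cases handled properly")
```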

API Monitoring & Metrics

Last but not least, let’s look at API monitoring, which is related to testing. API monitoring typically includes simple uptime checks as well as performance or functional tests. For the latter two, you can use your existing test definitions to build your monitoring system. The difference between testing and monitoring is that testing is done as part of the development, build and deploy process, whereas monitoring is done at regular intervals to catch potential problems with the system while it's in production. The results from regular monitoring and analytics provide KPIs that you can leverage to optimize your API.

Popular API performance testing metrics include:

- Uptime or availability.

- Requests per minute (RPM).

- CPU and memory usage.

- Average and maximum latency.

- API retention.
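Several of these metrics can be derived from a plain request log. The Python sketch below uses invented records of latency and success, and it approximates availability as the share of successful requests, which is a simplification of a real uptime check.

```python
def compute_metrics(
    records: list[tuple[float, bool]], window_minutes: float
) -> dict[str, float]:
    """Derive basic KPIs from (latency_ms, succeeded) records."""
    latencies = [latency for latency, _ in records]
    successes = sum(1 for _, ok in records if ok)
    return {
        # Approximation: share of successful requests, not a true uptime check.
        "availability_pct": 100.0 * successes / len(records),
        "requests_per_minute": len(records) / window_minutes,
        "average_latency_ms": sum(latencies) / len(latencies),
        "max_latency_ms": max(latencies),
    }


# Invented log: four requests observed within a two-minute window.
request_log = [(100.0, True), (150.0, True), (900.0, False), (50.0, True)]
metrics = compute_metrics(request_log, window_minutes=2.0)
print(metrics)
```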

Bottom Line

BlazeMeter provides advanced KPIs in real-time as well as storing results over time so they can be compared. Testers can drill down into results, share reports, and monitor their APIs across their teams.

Explore BlazeMeter API testing and monitoring today with our free trial.