Traditionally, when we write tests in JMeter, we create a test plan that is looped by one set of threads. The threads are introduced, perhaps over a ramp-up period, but once the ramp-up period is done and each thread finishes its first iteration, it starts the whole process over.

This creates what is called “concurrency.” We can say that our application can support a certain number of users (provided the test goes well). This is a good simulation of a real-life scenario, but it fails in a couple of key areas.

First, it means we need to contrive methods that make our virtual users look more like real-world users (think of using the HTTP Cache Manager to clear the cache at the start of each iteration). Second, if we want our group of users to do anything other than repeat the loop at the same speed the whole time, this setup limits us considerably.

This is where the Open Model Thread Group comes in.


The Benefits of Open Model Thread Group

Open Model Thread Group is an experimental thread group that was introduced in JMeter 5.5. While it is not a new release per se, the benefits of JMeter’s Open Model Thread Group are not yet being fully realized. With it, we can create more realistic load configurations in JMeter than ever before.

Customizing Thread Schedules

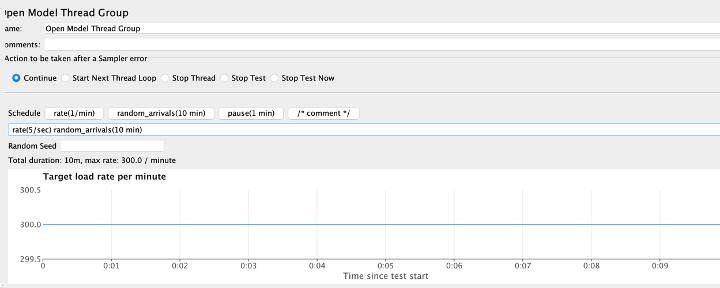

Open Model Thread Group works by letting you create a customizable schedule for your threads. You might be wondering how that differs from the Ultimate Thread Group. The key distinction is that the Open Model Thread Group deals with arrivals rather than concurrency. If it deals with arrivals, then how is it different from the Arrivals Thread Group? The Arrivals Thread Group can only ramp up to and hold a target arrival rate; it cannot lay out a custom schedule the way the Open Model Thread Group can.

Think of the Open Model Thread Group as if the Ultimate Thread Group and the Arrivals Thread Group had a baby. It takes the best part of the Ultimate Thread Group (being able to schedule out your threads) and combines it with the realism of the Arrivals Thread Group. As a result, you can dictate how quickly your virtual users arrive and build a meaningful scenario of users producing load on your application, without having to determine exactly how many users are active concurrently at any given time.

The concurrency then becomes a byproduct of the rate that users are coming to your application and how long they spend making requests to your services. It is a whole different way of thinking, but it creates new possibilities.
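This relationship is captured by Little's Law, L = λW: in steady state, average concurrency equals the arrival rate times the average time each user spends in the system. The Python sketch below illustrates it under stated assumptions. It uses a hypothetical 60-second average session (not a figure from this article), models random arrivals as a Poisson process (also an assumption), and simulates those arrivals so you can see concurrency emerge rather than being configured. It is an illustration of the idea, not how JMeter computes anything.

```python
import bisect
import random


def littles_law_concurrency(arrival_rate_per_sec: float, avg_session_sec: float) -> float:
    """Average steady-state concurrency via Little's Law: L = lambda * W."""
    return arrival_rate_per_sec * avg_session_sec


def simulate_concurrency(arrival_rate_per_sec: float, session_sec: float,
                         duration_sec: float, warmup_sec: float, seed: int = 42) -> float:
    """Simulate random (Poisson) arrivals where every user stays session_sec seconds.

    Returns the average number of active users, sampled once per second
    after the warm-up period so the system has reached steady state.
    """
    rng = random.Random(seed)
    arrivals = []
    t = 0.0
    while True:
        # Exponentially distributed gaps between arrivals give a Poisson process.
        t += rng.expovariate(arrival_rate_per_sec)
        if t >= duration_sec:
            break
        arrivals.append(t)  # appended in increasing order, so the list stays sorted

    samples = []
    for s in range(int(warmup_sec), int(duration_sec)):
        # Users active at time s arrived in the window (s - session_sec, s].
        active = (bisect.bisect_right(arrivals, s)
                  - bisect.bisect_right(arrivals, s - session_sec))
        samples.append(active)
    return sum(samples) / len(samples)


if __name__ == "__main__":
    # Hypothetical 60-second average session; not a number from the article.
    print(littles_law_concurrency(5, 60))    # baseline rate: 300
    print(littles_law_concurrency(50, 60))   # spike rate: 3000
    print(round(simulate_concurrency(5, 60, duration_sec=600, warmup_sec=120)))
```

The simulated figure should land close to the Little's Law estimate of 300. Note that if the application slows down and sessions get longer, W grows and so does concurrency, even though the arrival rate never changed.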

Simulating Major Traffic Spikes

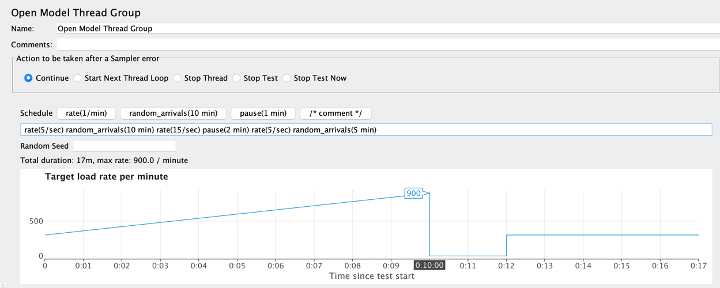

If you want to simulate a large traffic event or load spike on your app, it is not entirely realistic for the test to ramp up load dramatically in a matter of seconds, and the linear nature of other thread groups may not give us a particularly accurate simulation of this scenario. So, think about this from an arrivals perspective and translate that to the Open Model Thread Group. We start with some base traffic on our application: a rate of five users per second for 10 minutes, for example. This introduces new users to our flow for a specific amount of time and warms our application up to “normal” traffic.

Now add that big traffic spike. Ramp the rate up to 50 users per second over 15 seconds and hold that spike for just one minute, then let arrivals taper off over another minute back to our normal traffic of five new users per second for a final 10 minutes.

In the end, our schedule looks like this:

rate(5/sec) random_arrivals(10 min) rate(5/sec) random_arrivals(15 sec) rate(50/sec) random_arrivals(1 min) rate(50/sec) random_arrivals(1 min) rate(5/sec) random_arrivals(10 min)

Think of the “rate” elements as bookends to the “random_arrivals” elements. Each “random_arrivals” element defines a period during which threads arrive randomly, targeting a load set by the rates that bookend it. If the rates on either side of a “random_arrivals” element are the same, the arrival rate stays constant throughout that period; if they differ, the rate ramps from one to the other, as in the 15-second climb from 5 to 50 users per second.
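To make the bookend reading concrete, here is a minimal Python sketch of a hypothetical helper (it is not JMeter's own parser) that turns the schedule above into segments, then estimates the test length and the total number of arrivals. It assumes the rate moves linearly across each random_arrivals period and that a trailing random_arrivals with no closing rate holds the last rate. It only understands the rate and random_arrivals keywords plus a small, illustrative set of time units.

```python
import re

# Seconds per time unit; a small, hypothetical subset chosen for this sketch.
UNIT_SECONDS = {"ms": 0.001, "s": 1.0, "sec": 1.0, "m": 60.0, "min": 60.0, "h": 3600.0}

# Matches tokens such as "rate(5/sec)" and "random_arrivals(10 min)".
TOKEN = re.compile(r"(rate|random_arrivals)\(\s*([\d.]+)\s*/?\s*([a-z]+)\s*\)")


def parse_schedule(schedule):
    """Return (duration_sec, start_rate, end_rate) segments, rates in arrivals/sec."""
    segments = []
    current_rate = 0.0  # assumption: rate is 0 until the first rate(...) token
    pending = None      # duration of a random_arrivals(...) waiting for its closing rate
    for kind, value, unit in TOKEN.findall(schedule):
        if unit not in UNIT_SECONDS:
            raise ValueError(f"unsupported unit: {unit}")
        if kind == "rate":
            new_rate = float(value) / UNIT_SECONDS[unit]  # convert to per second
            if pending is not None:
                # The rates on either side bookend the random_arrivals period.
                segments.append((pending, current_rate, new_rate))
                pending = None
            current_rate = new_rate
        else:
            if pending is not None:
                # Two random_arrivals in a row: assume the rate holds steady.
                segments.append((pending, current_rate, current_rate))
            pending = float(value) * UNIT_SECONDS[unit]
    if pending is not None:
        # Assumption: a trailing random_arrivals with no closing rate keeps the last rate.
        segments.append((pending, current_rate, current_rate))
    return segments


def expected_arrivals(segments):
    # Area under a linear ramp is the average of its two rates times its duration.
    return sum(d * (r0 + r1) / 2 for d, r0, r1 in segments)


def target_rate_at(segments, t):
    """Target arrival rate (per second) t seconds into the test."""
    for d, r0, r1 in segments:
        if t < d:
            return r0 + (r1 - r0) * t / d  # linear interpolation within the segment
        t -= d
    return 0.0  # past the end of the schedule


SCHEDULE = ("rate(5/sec) random_arrivals(10 min) rate(5/sec) random_arrivals(15 sec) "
            "rate(50/sec) random_arrivals(1 min) rate(50/sec) random_arrivals(1 min) "
            "rate(5/sec) random_arrivals(10 min)")

if __name__ == "__main__":
    segs = parse_schedule(SCHEDULE)
    print(sum(d for d, _, _ in segs))   # 1335.0 seconds (22 min 15 s)
    print(expected_arrivals(segs))      # 11062.5 expected arrivals
    print(target_rate_at(segs, 607.5))  # 27.5 per second, halfway up the spike ramp
```

Under these assumptions, the whole scenario runs for about 22 minutes and introduces roughly 11,000 users, with the spike minutes contributing more than a third of them.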

By playing around with this, we have created a realistic load scenario in just a few minutes without relying on estimates of how many users will be on the system at any time. Instead, we let the concurrency on our application emerge naturally from the rate at which users arrive. How our system responds to this load will shape the resulting concurrency, sometimes in ways we did not expect.

Bottom Line

The Open Model Thread Group functionality of JMeter allows for much more flexibility when it comes to creating realistic load configurations. By combining the best parts of other thread groups, we can now properly prepare apps for major traffic events.

JMeter 5.5 included some really great upgrades to the platform, and Open Model Thread Group is one that may be flying under the radar. Do yourself a favor and get started testing with BlazeMeter and JMeter for all your thread group needs.