May 15, 2025

Concurrent user testing tools offer many benefits to testers and developers who need to determine overall application performance, even under heavy loads. By detecting synchronization issues in operations that happen simultaneously, concurrency testing helps teams:

- Optimize resource usage.

- Improve scalability efforts.

- Test earlier and minimize post-release downtime.

This tutorial will describe the steps that I take to do concurrency testing for JMeter scripts with BlazeMeter. I will give an overview of how teams can use our continuous testing platform to run a load test with 50K concurrent users, as well as show how it can handle bigger tests of up to 2 million users.

Concurrency Testing With JMeter & BlazeMeter: An Overview

The process of running concurrency testing with JMeter and then BlazeMeter is straightforward:

- Create a JMeter test script and test it locally.

- Upload this JMeter script to a BlazeMeter performance test.

- Set the number of concurrent users in BlazeMeter, as well as duration and load generators.

- Set up a larger number of concurrent users and load generators for a longer time duration.

- Examine your results in BlazeMeter’s Engine Health tab within the platform.

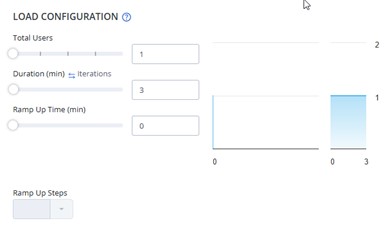

In the following concurrent user testing example, you will see that the number of users is originally set to 1, with a runtime of three minutes and zero for the ramp-up time. The number of users in the concurrent test case then grows to 300, with a duration of 25 minutes with an additional five-minute ramp-up time.

Now, let us take a closer look at each of these steps in depth.

Step 1: Write Your JMeter Script

To simplify the concurrency testing process, make sure to get the latest JMeter version from the Apache JMeter project and download the JMeter Plugins Manager.

Once you've downloaded the JAR file, put it into JMeter's “lib/ext” folder. Then start JMeter and go to the "Options" menu to access the Plugins Manager.

There are three main ways to get your script:

- Use the BlazeMeter Chrome Plugin to record your scenario.

- Use the JMeter HTTP(S) Test Script Recorder. This method sets up a proxy that you run your test through so that it records everything.

- Build your test manually.

If you are using the BlazeMeter Chrome Extension or JMeter’s Test Script Recorder, keep in mind:

- You will need to change certain parameters such as Username & Password, or you might want to set a CSV file with those values so each user can be unique.

- To complete requests such as "AddToCart", "Login" and more, you might need to extract elements such as Token-String, Form-Build-Id and others using Regular Expressions, JSON Path Extractor, or XPath Extractor.

- Keep your script parameterized and use config elements such as HTTP Request Defaults to simplify the switch between environments.
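
To make the CSV suggestion above concrete, here is a minimal Python sketch that generates a file of unique credentials for a CSV Data Set Config to read. The function names, the `loadtest_user` naming scheme, and the password format are all hypothetical placeholders, so adapt them to whatever your application's registration rules require.

```python
import csv


def build_user_rows(count, prefix="loadtest_user"):
    """Return `count` unique (username, password) pairs.

    The naming scheme and password format are placeholders for this sketch.
    """
    return [(f"{prefix}_{i:05d}", f"Pw-{i:05d}!") for i in range(1, count + 1)]


def write_users_csv(rows, handle):
    """Write the rows as a header-less CSV, one virtual user per line."""
    writer = csv.writer(handle, lineterminator="\n")
    writer.writerows(rows)
```

Calling `write_users_csv(build_user_rows(300), f)` with `f` opened as `users.csv` would produce one line per virtual user, which you can then map to two variables in the CSV Data Set Config.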

Step 2: Testing Locally Using JMeter

Once you have created your test, you can start debugging your script with one thread and one iteration. To do this, use JMeter’s View Results Tree element, the Debug Sampler, and the Dummy Sampler, and keep the Log Viewer open (in case some JMeter errors are reported).

Go over all the scenarios (True and False responses) to make sure the script behaves as it should.

After the script has run successfully using one thread, raise it to 10-20 threads for 10 minutes and check the following:

- If you intended that each user be unique, is that the case?

- Are you getting any errors?

- If you are running a registration process, look at your backend – Are the accounts created according to your template? Are they unique?

- Do the statistics you see in your JMeter testing summary report make sense? You can find this out by looking at metrics such as average response time, errors, and hits/s.
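
If you save the local run's results to a CSV file, the sanity check on the statistics can be sketched in a few lines of Python. This assumes the results file has a header row containing JMeter's `timeStamp` (epoch milliseconds), `elapsed` (milliseconds), and `success` columns. The hits/s figure here is a rough approximation over the first-to-last sample window, not necessarily the same number the summary report shows.

```python
import csv


def summarize_jtl(handle):
    """Summarize a JMeter CSV results file.

    Assumes the header row contains `timeStamp` (epoch ms), `elapsed` (ms)
    and `success` ("true"/"false").
    """
    samples = 0
    errors = 0
    total_elapsed = 0
    first_ts = None
    last_ts = None
    for row in csv.DictReader(handle):
        ts = int(row["timeStamp"])
        samples += 1
        total_elapsed += int(row["elapsed"])
        if row["success"].strip().lower() != "true":
            errors += 1
        first_ts = ts if first_ts is None else min(first_ts, ts)
        last_ts = ts if last_ts is None else max(last_ts, ts)
    if samples == 0:
        return {"samples": 0, "avg_ms": 0.0, "error_pct": 0.0, "hits_per_s": 0.0}
    # Guard against a zero-length window when all samples share a timestamp.
    window_s = max((last_ts - first_ts) / 1000.0, 1.0)
    return {
        "samples": samples,
        "avg_ms": total_elapsed / samples,
        "error_pct": 100.0 * errors / samples,
        "hits_per_s": samples / window_s,
    }
```

An error percentage well above zero, or an average response time far from what you see in a browser, is a hint that the script needs more debugging before it is uploaded.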

Once your script is ready, clean it up by removing any Debug/Dummy Samplers and deleting your script listeners. The only exception to this step is for the “View Results Tree” listener, which should be disabled prior to your execution in BlazeMeter. Then, set the number of threads and users to “1”.

If you use Listeners (such as "Save Responses to a file") or a CSV Data Set Config, make sure you don't use the path you have used locally. Instead, use only the filename as if it was in the same folder as your script. If you are using your own proprietary JAR file(s), be sure to upload those as well.
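
One way to catch leftover local paths before uploading is to scan the JMX file, which is plain XML. The sketch below assumes that file references are stored in `stringProp` elements named `filename`; check this against your own JMX, since plugins may store paths under other property names. The function name is hypothetical.

```python
import xml.etree.ElementTree as ET


def find_hardcoded_paths(jmx_text):
    """Return `filename` property values that contain a directory component.

    Assumption: file references live in <stringProp name="filename"> elements.
    """
    root = ET.fromstring(jmx_text)
    flagged = []
    for prop in root.iter("stringProp"):
        if prop.get("name") != "filename":
            continue
        value = (prop.text or "").strip()
        # A bare filename has no slash or backslash in it.
        if "/" in value or "\\" in value:
            flagged.append(value)
    return flagged
```

Any value this returns is a candidate for replacement with the bare filename. Empty `filename` properties and bare names such as `users.csv` are not flagged.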

If you are using more than one JMeter Thread Group (or not the default one), make sure to set the values before uploading to BlazeMeter.

Step 3: BlazeMeter Initial Testing

Now that your script is ready and you have successfully run your test locally, it is time to start concurrency testing your JMeter scripts in BlazeMeter. I recommend that you run your initial test with just one user for three minutes with no ramp-up time. If your application does not require you to interact with any of your internal resources, you can use any available public load generator.

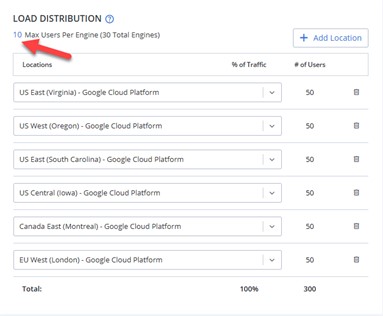

In the following image, you will see that the follow-up test run will have 300 users, a duration of 25 minutes, and a five-minute ramp up.

For the number of engines, you will select six public load generators, each set to a maximum of 10 users per engine. Configuring the load this way gives you a good picture of how the load is distributed across the public load generators.

This configuration for the BlazeMeter test case allows you to test your script and backend and ensure everything works well from BlazeMeter.

Common issues you may come across include:

- Firewall. Make sure your environment is open to the BlazeMeter CIDR list (which is updated from time to time) and whitelist the necessary addresses.

- Property issues. Make sure all your test files are present, such as CSVs, JAR, JSON, and User.properties.

- Paths. Make sure not to use any hard-coded paths in Listeners or in the CSV Data Set Config. Instead, reference the CSV file by filename only, as if it sat at the same level as the JMeter script.
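
For the firewall item in the list above, a quick way to see which published ranges your allowlist does not yet cover is to compare the two lists with Python's `ipaddress` module. This is only a sketch: the function name is hypothetical, and the ranges in the usage note are reserved documentation addresses, not real BlazeMeter CIDRs.

```python
import ipaddress


def missing_ranges(published_cidrs, firewall_allowlist):
    """Return the published CIDR blocks not fully covered by any allowlist rule."""
    allowed = [ipaddress.ip_network(rule) for rule in firewall_allowlist]
    missing = []
    for cidr in published_cidrs:
        net = ipaddress.ip_network(cidr)
        # subnet_of() only accepts networks of the same IP version.
        covered = any(
            net.version == rule.version and net.subnet_of(rule) for rule in allowed
        )
        if not covered:
            missing.append(cidr)
    return missing
```

For example, `missing_ranges(["203.0.113.0/25", "192.0.2.0/24"], ["203.0.113.0/24"])` returns `["192.0.2.0/24"]`, because only the first range sits inside an allowed block. Since the published list changes from time to time, a check like this is worth rerunning before large tests.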

If you are still having trouble, check the logs for errors. You should be able to download the entire log.

The initial BlazeMeter setup before getting into the 50,000 concurrent user run is defined as:

- Total Users: 300

- Duration (mins): 25

- Ramp up time (min): 5

- Ramp up steps: 5

- Load distribution:

  - 6 public load generators

  - 10 max users per engine in each load generator

By configuring the ramp up time at 5 minutes with 5 ramp-up steps, you will have enough data during your ramp-up period to ensure the script is executing as expected and easily resolve any issues.
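
To see what load to expect at each point of the ramp-up, the arithmetic can be sketched as follows. This assumes equal-sized steps spaced evenly across the ramp-up window, with the first step starting at minute 0. The platform's exact stepping behavior may differ, so treat the output as an approximation.

```python
def ramp_schedule(total_users, ramp_minutes, steps):
    """Return (start_minute, active_users) pairs for a stepped ramp-up.

    Assumes equal-sized steps spaced evenly across the ramp-up window,
    with the first step starting at minute 0.
    """
    if steps < 1:
        raise ValueError("steps must be at least 1")
    step_minutes = ramp_minutes / steps
    schedule = []
    for step in range(1, steps + 1):
        # Rounding per step keeps the final step exactly at total_users.
        users = round(total_users * step / steps)
        schedule.append(((step - 1) * step_minutes, users))
    return schedule
```

Under these assumptions, `ramp_schedule(300, 5, 5)` gives 60 users at minute 0, then 120, 180, 240, and finally 300 users from minute 4 onward, so each load level is held for about a minute before the next step.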

Look at the “Timeline Report” and “Request Stats” tabs in the BlazeMeter test case report to see how the requests are performing.

You should also watch the “Engine Health” tab as part of your BlazeMeter test case to see how much memory & CPU was used. This tab will be especially helpful in the next stage of concurrency testing, when you will set up the number of users per engine.

Step 4: Determine the Number of Concurrent Users for Testing

Now that we are sure the script runs flawlessly in BlazeMeter, we need to figure out how many users we can apply to one engine.

If you can use the BlazeMeter test case data to determine that, great!

For the rest of us, here is how I recommend figuring out the number of users you can set without looking back on the BlazeMeter test case data.

Set your test configuration to:

- Number of threads: 500

- Ramp up: 30 minutes

- Ramp-up steps: 10

- Duration: 50 minutes

Use one load generator and set the maximum number of users to 500. Then, run the test and monitor your test's engine (through the “Engine Health” tab).

If your engine did not reach either a 75% CPU utilization or 85% Memory usage (one-time peaks can be ignored):

- Change the number of users to 700 and run the test again.

- Set the maximum number of users per engine in the load generator to 350. This change will distribute the load evenly across two engines.

- Raise the number of users until you get either 1000 users (evenly split between two engines in the load generator) or 60% CPU or memory usage.

If your engine passed the 75% CPU utilization or 85% Memory usage (one-time peaks can be ignored):

- Look at the point in time when you first reached 75% CPU or 85% memory, and see how many users you had at that point.

- This time, put the ramp-up you want to have in the real test (5-15 minutes is a great start) and set the duration to 50 minutes.

- Make sure you don't go over 75% CPU or 85% memory usage throughout the test.

You can also reduce the number of users per engine by 10% to be on the safe side.
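
The decision rule in this step can be sketched in Python. The sketch assumes you have noted the engine health readings as a time-ordered list of `(users, cpu_pct, mem_pct)` samples. That sample shape, the function name, and the "two consecutive samples" definition of a sustained breach are assumptions made for illustration, not something the platform exports in this form.

```python
def safe_users_per_engine(samples, cpu_limit=75.0, mem_limit=85.0,
                          sustained=2, margin_pct=10):
    """Estimate a per-engine user count from engine-health samples.

    `samples` is a time-ordered list of (users, cpu_pct, mem_pct) tuples.
    A breach counts only once it lasts `sustained` consecutive samples,
    so one-time peaks are ignored.
    """
    if not samples:
        raise ValueError("at least one sample is required")
    streak = 0
    users_at_limit = None
    for index, (users, cpu, mem) in enumerate(samples):
        if cpu >= cpu_limit or mem >= mem_limit:
            streak += 1
            if streak == sustained:
                # Users at the first sample of the sustained breach.
                users_at_limit = samples[index - sustained + 1][0]
                break
        else:
            streak = 0
    if users_at_limit is None:
        # Never breached: use the highest load observed in the run.
        users_at_limit = max(users for users, _, _ in samples)
    # Integer arithmetic applies the safety margin without float rounding.
    return users_at_limit * (100 - margin_pct) // 100
```

With a run that spikes once at 300 users but only stays above 75% CPU from 400 users onward, the function returns 360: the 400-user crossing point minus the 10% safety margin.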

Step 5: Set Up and Test 50,000 Concurrent Users (And More!)

We now know how many concurrent users we can get from one load generator or engine. At the end of this step, we will know how many users we can get through a combination of load generators.

The desired load generator configuration that we are looking for here will be 10 load generators with each engine running 500 users. This configuration will give us a total of 100 engines spread out to 10 load generators.

The maximum number of load generators per BlazeMeter performance test case is 10. The number of load generators that you can use is controlled by your BlazeMeter plan.

At this step, we will take the test that we built earlier and make two changes:

- Set the number of load generators to 10.

- Set the number of users per engine to 500, and the total number of users to 50,000.
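
The engine arithmetic behind this configuration is simple enough to check with a short helper. The function name is hypothetical, and the sketch assumes engines are spread as evenly as possible across the load generators.

```python
import math


def engine_plan(total_users, users_per_engine, load_generators):
    """Return (total_engines, engines_per_load_generator).

    Assumes an even spread of engines across load generators; the
    per-generator figure is rounded up when the split is not exact.
    """
    if total_users < 1 or users_per_engine < 1 or load_generators < 1:
        raise ValueError("all arguments must be positive")
    total_engines = math.ceil(total_users / users_per_engine)
    per_generator = math.ceil(total_engines / load_generators)
    return total_engines, per_generator
```

`engine_plan(50000, 500, 10)` returns `(100, 10)`, matching the 100 engines across 10 load generators described above, and `engine_plan(300, 10, 6)` returns `(30, 5)` for the earlier 300-user run.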

Run the test for the full length of your final test, whether that is one hour or a specified number of hours. While the test is running, go to the Engine Health tab and verify that none of the engines are passing the 75% CPU or 85% memory limit.

If any engine in the BlazeMeter test has passed that limit (CPU/Memory), decrease the number of users per engine and run the test again until the test case run is within these limits.

At the end of this process, you will know:

- Your maximum users per test case run.

- The hits/s per test case run that you will reach.

You can also review the results of the test case run based on the response time, bandwidth, and more.

Additionally, you can change your BlazeMeter test case to use load generators located in different geographical regions, as well as different cloud providers, network emulators, and different parameters.

The BlazeMeter test case run “summary” tab will provide you with the metrics of the run.

Bottom Line

Running concurrency testing with JMeter as the basis is both straightforward and scalable with BlazeMeter. BlazeMeter is 100% compatible with JMeter, enabling JMeter users to go beyond open source and test concurrent users at enterprise scale in the cloud.

Want to easily run performance tests for up to 50,000 virtual users, and even up to 2 million virtual users? Try out BlazeMeter for free to get started.